A Mixed Blessing II: MoE for Images and Video

8/18/2025First published at the Synthesis AI blog

In this second installment of the MoE series, we venture beyond text to explore how MoE architectures are revolutionizing computer vision, video generation, and multimodal AI. From V-MoE pioneering sparse routing for image classification through DiT-MoE’s efficient diffusion models, CogVideoX’s video synthesis, and Uni-MoE’s five-modality orchestration, to cutting edge research from 2025, we examine how the principle of conditional computation adapts to visual domains. We also consider the mathematical foundations with variational diffusion distillation and explore a recent promising hybrid approach known as Mixture-of-Recursions. We will see that MoE is not just a scaling trick but a fundamental design principle—teaching our models that not every pixel needs every parameter, and that true efficiency lies in knowing exactly which expert to consult when.

Introduction

In the first post on Mixture-of-Experts (MoE), we explored how MoE architectures revolutionized language modeling by enabling sparse computation at unprecedented scales. We saw how models like Switch Transformer and Mixtral achieved remarkable parameter efficiency by routing tokens to specialized subnetworks, fundamentally changing our approach to scaling neural networks. But text is just one modality, albeit a very important one. What happens when we apply these sparse routing principles to images, videos, and the complex interplay between different modalities?

It might seem that the transition from text to visual domains is just a matter of swapping in a different embedding layer, and indeed we will see some methods that do just that. But challenges go far beyond: images arrive as two-dimensional grids of pixels rather than linear sequences of words, while videos add another temporal dimension, requiring models to maintain consistency across both space and time. Multimodal scenarios demand that models not only process different types of information but also understand the subtle relationships between them, aligning the semantic spaces of text and vision, audio and video, or even all modalities simultaneously.

Yet despite these challenges, the fundamental intuition behind MoE—that different parts of the input may benefit from different computational pathways—proves even more useful in visual and multimodal settings. A background patch of sky requires different processing than a detailed face; a simple pronoun needs less computation than a semantically rich noun phrase; early diffusion timesteps call for different expertise than final refinement steps. This observation has led to an explosion of works that adapt MoE principles to multimodal settings.

In this post, we review the evolution of MoE architectures beyond text, starting with pioneering work in computer vision such as V-MoE, exploring how these ideas transformed image and video generation with models like DiT-MoE and CogVideoX, and examining the latest results from 2025. We survey MoE through the lens of allocation: what gets routed, when routing matters in the generative process, which modality gets which capacity, and even how deep each token should think. We will also venture into the mathematical foundations that make some of these approaches possible and even explore how MoE concepts are merging with other efficiency strategies to create entirely new architectural paradigms such as the Mixture-of-Recursions.

MoE Architectures for Vision and Image Generation

The jump from text tokens to image patches is not as trivial as swapping the embedding layer. Pixels arrive in two spatial dimensions, self‑attention becomes quadratic in both area and channel width, and GPUs are suddenly starved for memory rather than flops. Yet the same divide‑and‑conquer intuition that powers sparse LLMs has proven to be equally fruitful in vision: route a subset of patches to a subset of experts, and you’re done. Below we consider two milestones—V‑MoE as an example of MoE for vision and DiT‑MoE as an example of MoE for image generation—and another work that is interesting primarily for its mathematical content, bringing us back to the basic idea of variational inference.

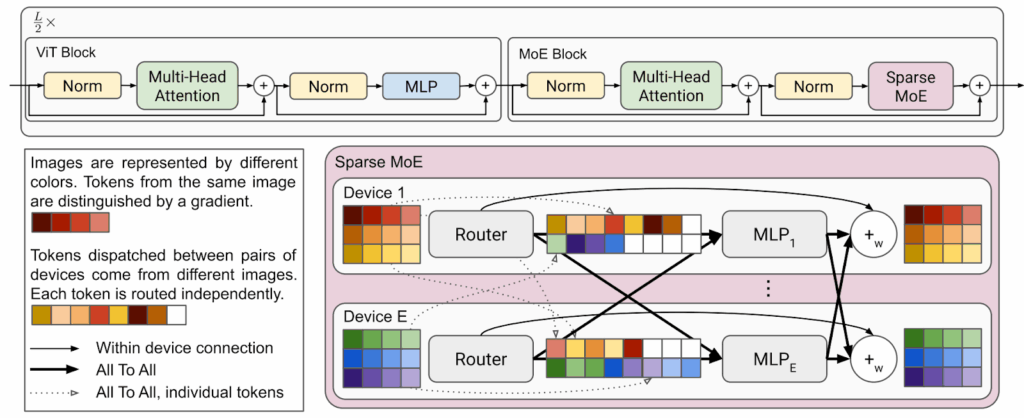

V-MoE. Google Brain researchers Riquelme et al. (2021) were the first to present a mixture-of-experts approach to Transformer-based computer vision. Naturally, they adapted the Vision Transformer (ViT) architecture by Dosovitsky et al. (2020), and their basic architecture is just ViT with half of its feedforward blocks replaced by sparse MoE blocks, just like the standard MoE language models that we discussed last time:

Probably the most interesting novel direction explored by Riquelme et al. (2021) was that MoE-based vision architectures are uniquely flexible: you can tune the computational budget and resulting performance by changing the sparsity of expert activations. A standard (vanilla) routing algorithm works as follows:

- the input is a batch of

images composed of

tokens each, and every token has a representation of dimension

, so the input is

;

- the routing function

assigns routing weights for every token, so

is the weight of token

and expert

;

- finally, the router goes through the rows of

and assigns each token to its most suitable expert (top-1) if the expert’s buffer is not full, then goes through the rows again and assigns each unassigned token to its top-2 expert if that one’s buffer is not full, and so on.

The Batch Prioritized Routing (BPR) approach by Riquelme et al. (2021) tries to favor the most important tokens and discard the rest. It computes a priority score for every token based on the maximum routing weight, either just as the maximum weight

or as the sum of top-k weights for some small

. Essentially, this assumes that important tokens are those that have clear winners among the experts, while tokens that are distributed uniformly probably don’t matter that much. The BPR algorithm means that the tokens are first ranked according to

, using the priority scores as a proxy for the priority of allocation. Tokens that have highly uneven distributions of expert probabilities get routed first when experts have bounded capacity.

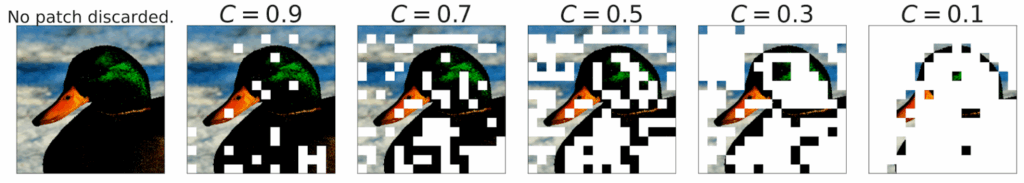

To adjust the capacity of experts, the authors introduce capacity ratio, a constant that controls the total size of an expert’s buffer. For

experts, the buffer capacity of each is defined as

Here is an illustration of how small values of work on the first layer, where tokens are image patches; you can see that a trained V-MoE model indeed understands very well which patches are most important for image classification:

So by setting you can control the computational budget of the MoE model, and it turns out that you can find tradeoffs that give excellent performance at a low price. In the figure below, on the left you can see different versions of V-MoE (for different

) and how they move the Pareto frontier compared to the basic ViT. On the right, we see that BPR actually helps improve the results compared to vanilla routing, especially for

when V-MoE starts actually dropping tokens (the plots are shown for top-2 routing, i.e.,

):

While V-MoE was the first to demonstrate the power of sparse routing for image classification, the next frontier lay in generation tasks. Creating images from scratch presents fundamentally different challenges than recognizing them, yet the same principle of selective expert activation proves equally valuable.

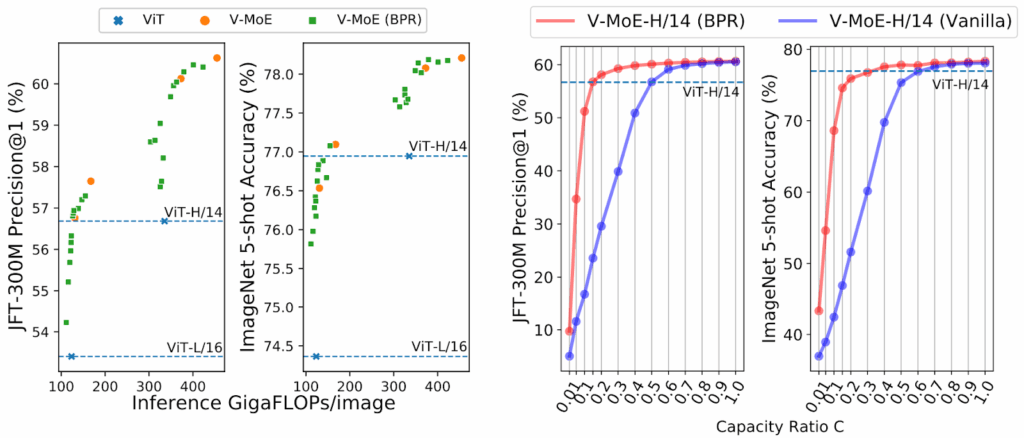

DiT-MoE. The Diffusion Transformer (DiT; Peebles, Xie, 2022) was a very important step towards scaling diffusion models for image generation to real life image sizes. It replaced the original convolutional UNet-based architectures of diffusion models with Transformers, which led to a significant improvement in the resulting image quality.

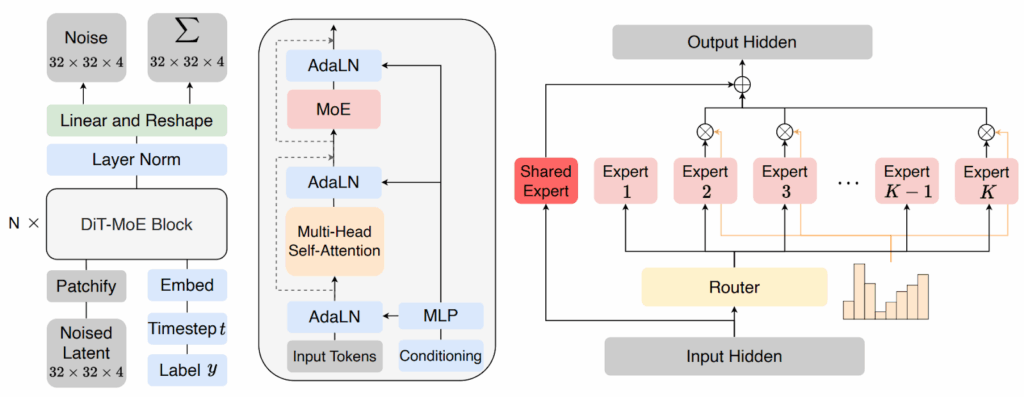

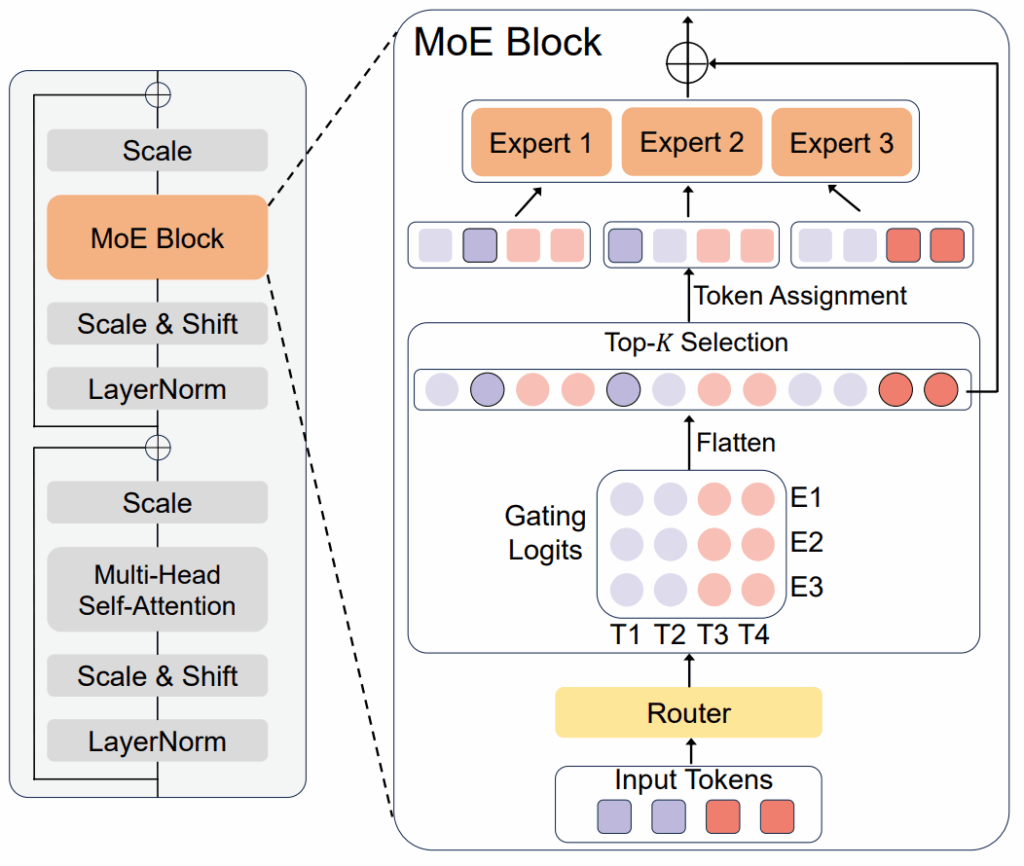

Fei et al. (2024) made the logical next step of combining the DiT with the standard MoE approach inside feedforward blocks in the Transformer architecture. In general, the DiT-MoE follows the DiT architecture but replaces each MLP with a sparsely activated mixture of MLPs, just like V-MoE did for the ViT:

They also introduce some small number of shared experts and add a load balancing loss that has been a staple of MoE architectures since Shazeer et al. (2017):

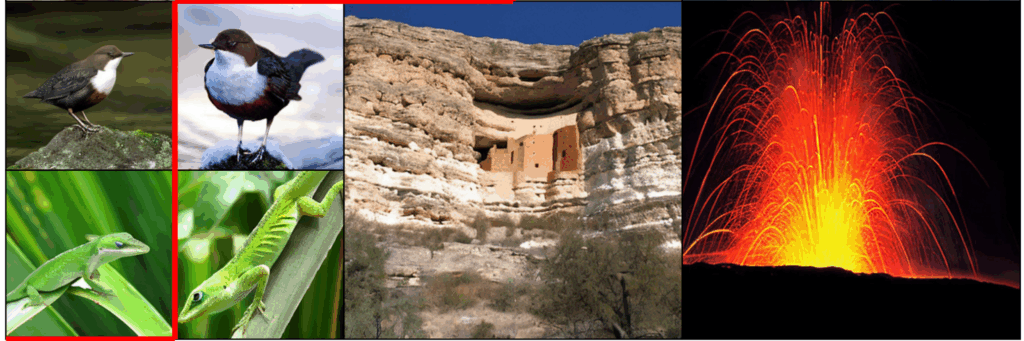

As a result, their DiT-MoE-XL version, which had 4.1B total parameters but activated only 1.5B on each input, significantly outperformed Large-DiT-3B, Large-DiT-7B, and LlamaGen-3B (Sun et al., 2024) that all had more activations and hence more computational resources needed per image. Moreover, they could scale the largest of their models to 16.5B total parameters with only 3.1B of them activated per image, achieving a new state of the art in image generation. Here are some sample generations by Fei et al. (2024):

Interestingly, MoE sometimes helps more in image diffusion than in classification because diffusion’s noise‑conditioned stages are heterogeneous: features needed early (global structure) differ from late (high‑freq detail). MoE lets experts specialize by timestep and token type, which improves quality at no cost to the computational budget. This “heterogeneity‑exploiting” view also explains Diff‑MoE and Race‑DiT that we consider below. But first, to truly understand the theoretical foundations that make these approaches possible, allow me to take a brief detour into the mathematical machinery that powers some of the most sophisticated MoE variants.

A Mathematical Interlude: Variational Diffusion Distillation

For me, it is always much more interesting to see mathematical concepts come together in unexpected ways than to read about a well-tuned architecture that follows the best architectural practices and achieves a new state of the art by the best possible tweaking of all hyperparameters.

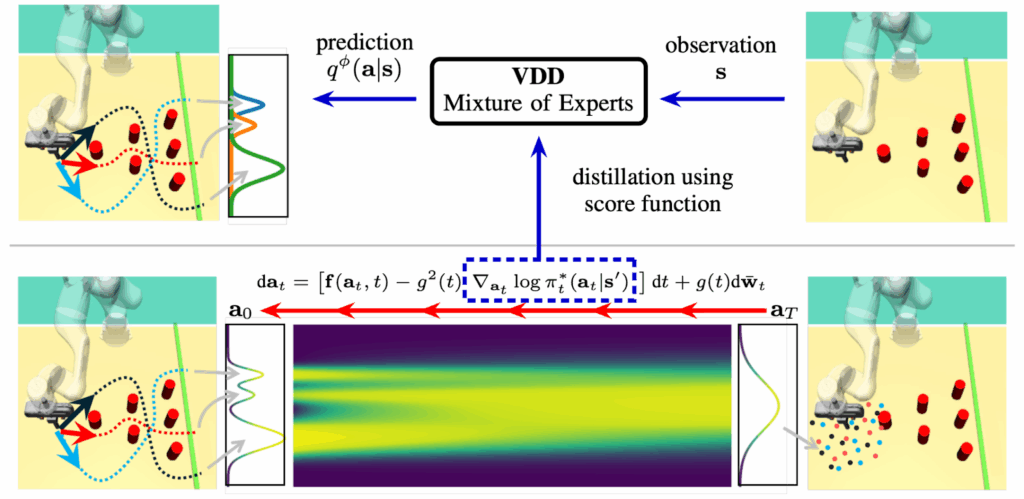

So even though it may not be the most influential paper in this series, I cannot miss the work of Zhou et al. (2024) from Karlsruhe Institute of Technology who present the Variational Diffusion Distillation (VDD) approach that combines diffusion models, Gaussian mixtures, and variational inference. You can safely skip this section if you are not interested in a slightly deeper dive into the mathematics of machine learning.

Their basic motivation is that while MoEs are great for complex multimodal tasks such as robot behaviours (Zhou et al. come from the perspective of robotics, so all pictures in this part will show some robots), they are hard to train due to stability issues. On the other hand, diffusion models had recently achieved some great results for representing robot policies in Learning from Human Demonstrations (LfD; Lin et al., 2021) but they have very long inference times and intractable likelihoods; I discussed this in my previous posts on diffusion models.

So the solution would be to train an inefficient diffusion model for policy representation first and then distill it to a mixture of experts that would be faster and would have tractable likelihood; here is an illustration by Zhou et al. (2024):

The interesting part is how they do this distillation. If you have a diffusion policy and a new policy representation

with parameters

, the standard variational approach would lead to minimizing the Kullback-Leibler divergence

, i.e.,

- amortized variational inference means that we learn a single conditional model

instead of learning a separate

for every state;

- stochastic variational inference (Hoffman et al., 2013) uses mini-batch computations to approximate the expectation

.

This would be the standard approach to distillation, but there are two problems in this case:

- first, we don’t know

because diffusion models are intractable, we can only sample from it;

- second, the student is a mixture of experts

and it is difficult to train a MoE model directly.

To solve the first problem, Zhou et al. (2024) note that they have access to the score functions of the diffusion model from the teacher:

Using the reparametrization trick, they replace the gradients with

For the second problem, the objective can be decomposed so each expert can be trained independently. Specifically, the authors construct an upper bound for that can be decomposed into individual objectives for every expert. In this way, we can then do reparametrization for each expert individually, while reparametrizing a mixture would be very difficult if not impossible.

Using the chain rule for KL divergences, they begin with

Since KL is nonnegative, is obviously an upper bound for

, and this means that we can now organize a kind of expectation-maximization scheme for optimization. On the M-step, we update

in two steps:

- update the experts, minimizing

individually for every expert with what is basically the standard reverse KL objective but for one expert only;

- update the gating mechanism’s parameters, minimizing

with respect to

:

There is another technical problem with the latter: we cannot evaluate . To fix that, Zhou et al. (2024) note that

is a simple categorical distribution and does not really need the good things we get from variational inference. The main thing about variational bounds is that they let us estimate

, which has important properties for situations where

is very complex and

is much simpler, as is usually the case in machine learning (recall, e.g., my post on variational autoencoders).

But in this case both and

are general categorical distributions, so it’s okay to minimize

, which is basically the maximum likelihood approach. Specifically, the optimization problem for

is

The E-step, on the other hand, is really straightforward: the problem is to minimize

I hope you’ve enjoyed this foray into the mathematical side of things. I feel it’s important to remind yourself from time to time that things we take for granted such as many objective functions actually have deep mathematical meaning, and sometimes we need to get back to this meaning in order to tackle new situations.

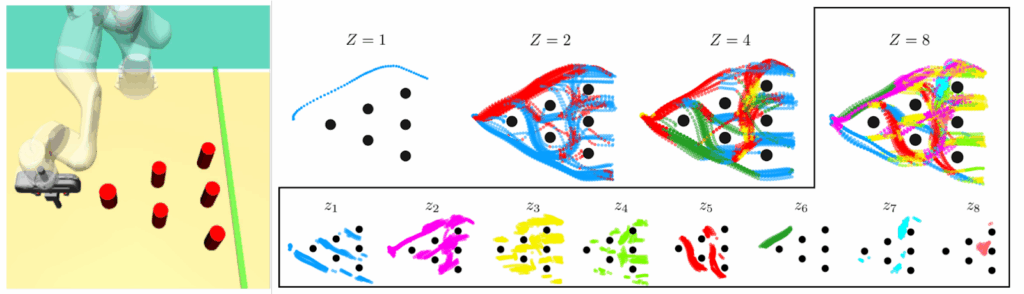

As for the results, all of these tricks allow to distill denoising diffusion policies into mixtures of experts. The point of this work is not to improve action selection policies, it is to make them practical because it’s absolutely impossible to do a reverse diffusion chain every time your robot needs to move an arm. And indeed, after distillation the resulting MoE models not only solve tasks quite successfully but also learn different experts. The illustration below shows that as the number of experts grows the experts propose different trajectories for a simple obstacle avoiding problem:

MoE for Video Generation

The leap from image to video generation is in many ways a computational challenge: each video contains hundreds and thousands of high-resolution images, requiring a steep scaling of resources. Moreover, video generation must maintain temporal coherence: objects must move smoothly, lighting must remain consistent, and characters must retain their identity across hundreds of frames. Traditional approaches have involved various compromises: generating at low resolution and upscaling, processing frames independently and hoping for the best, or limiting generation to just a few seconds. But as we will see in this section, MoE architectures can offer more elegant solutions.

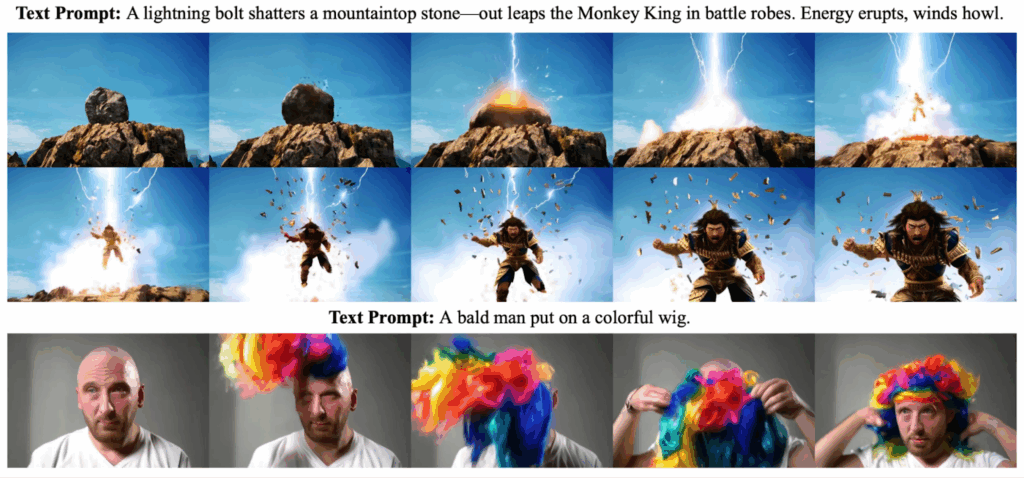

CogVideoX. Zhipu AI researchers Yang et al. (2025) recently presented the CogVideoX model based on DiT that is able to generate ten-second continuous videos with resolutions up to 768×1360 pixels. While their results are probably not better than Google Veo 3, they are close enough to the frontier:

And unlike Veo 3, this is a text-to-video model that we can actually analyze in detail, so let’s have a look inside.

From the bird’s eye view, CogVideoX attacks the bottleneck of high-resolution, multi-second video generation from text. There are several important problems here that have plagued video generation models ever since they appeared:

- sequence length explosion: if we treat a 10-second HD video clip as a flat token grid, we will get GPU-prohibitive input sizes;

- weak text-video alignment: standard DiT-based models often have difficulties in fusing the text branch and visual attention, so often you would get on-topic but semantically shallow clips;

- temporal consistency: even large latent-video diffusion models have struggled with preserving consistency beyond ~3 seconds, leading to flickers and changing characters and objects in the video.

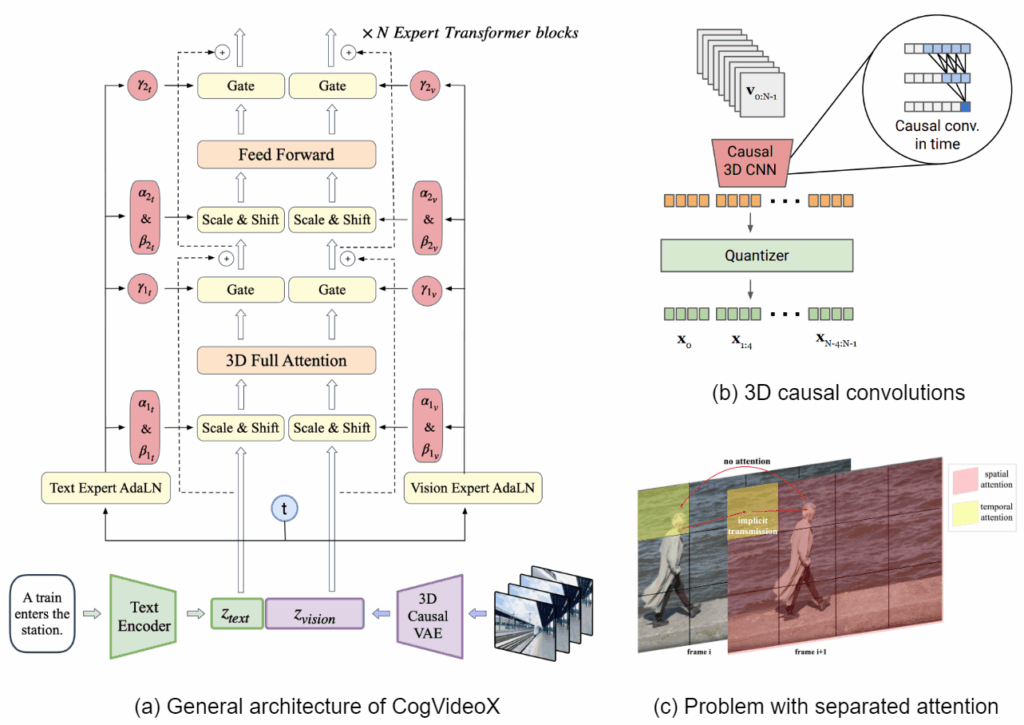

To solve all this, CogVideoX combines two different architectures:

- a 3D causal variational autoencoder (VAE) that compresses space and time;

- an Expert Transformer with expert-adaptive layer normalization layers to fuse language and video latents.

Here is a general illustration that we will discuss below; part (a) shows the general architecture of CogVideoX:

The components here are quite novel as well. The 3D causal VAE, proposed by Yu et al. (2024), does video compression with three-dimensional convolutions, working across both space and time. The “causal” part means that unlike standard ViT-style architectures, convolutions go only forward in time, causally; part (b) above shows an illustration of 3D causal CNNs by Yu et al. (2024).

In the Expert Transformer, the main new idea is related to the differences between the text and video modalities. Their feature spaces may be very different, and it may be impossible to align them with the same layer normalization layer. Therefore, as shown in part (a) above, there are two separate expert AdaLN layers, one for text and one for video.

Finally, they move from separated or sparsified spatial and temporal attention to full 3D attention, often used previously to save on compute. The problem with separated attention, though, is that it leads to flickers and inconsistencies: part (c) of the figure above shows how the head of the person in the next frame cannot directly attend to his head in the previous frame, which is often a recipe for inconsistency. Yang et al. (2025) note “the great success of long-context training in LLMs” and propose a 3D text-video hybrid attention mechanism that allows to attend to everything at once.

The result is a 5B-parameter diffusion model that can synthesize 10 second clips at 768×1360 resolution in 16fps while staying coherent to the prompt and maintaining large-scale motion. You can check out their demo page for more examples.

Multimodal MoE: Different Experts Working Together

Text-to-image and text-to-video models that we have discussed are also multimodal, of course: they take text as input and output other modalities. But in this section, I’d like to concentrate on multimodal MoE defined as models where the same mixture of experts has different experts processing different modalities.

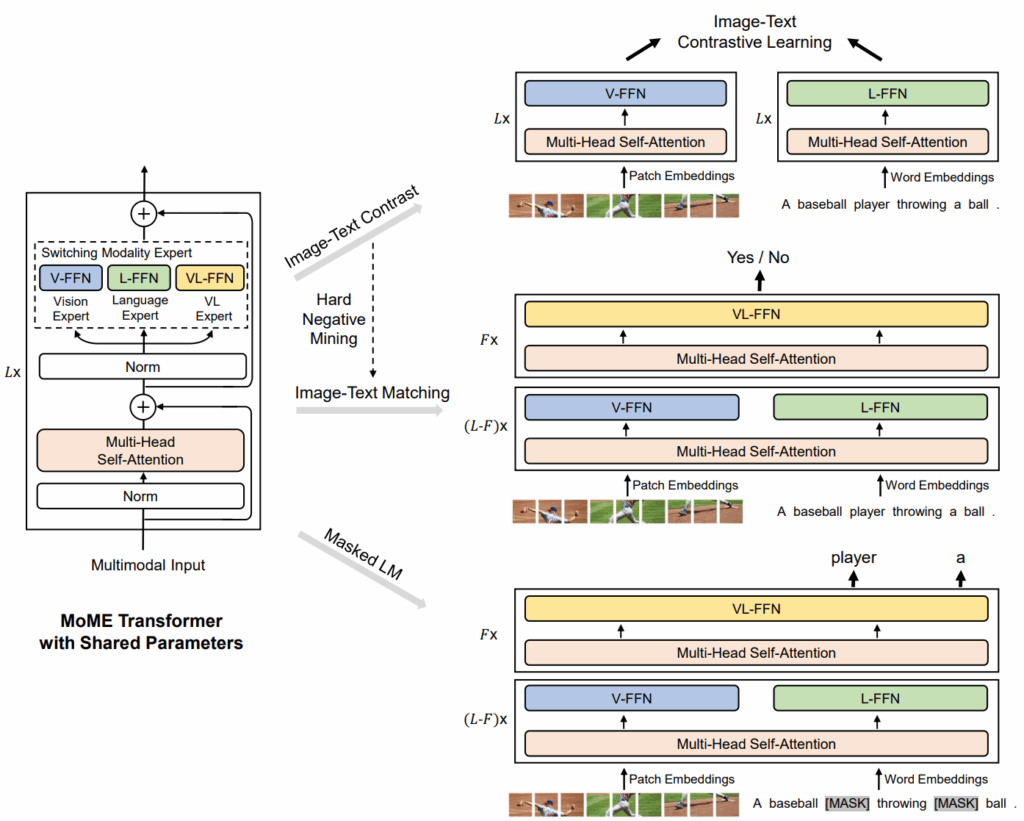

VLMo. Microsoft researchers Bao et al. (2021) introduced their unified vision-language pretraining with mixture-of-modality-experts (VLMo) as one of the first successful such mixtures.

Before VLMo, vision–language models had followed two main paradigms:

- dual encoders, e.g., CLIP or ALIGN, where an image encoder and text encoder are processing their modalities separately, trained with contrastive learning; these models are very efficient for retrieval but weak at deep reasoning since text and images do not mix until the very end;

- fusion encoders, e.g., UNITER or ViLBERT, which use image and text tokens via cross-modal attention; these models are stronger on reasoning and classification but retrieval is quadratic since every text-image pair has to be encoded jointly and thus separately.

The insight of Bao et al. (2021) was to unify both in a single architecture. The key novelty is replacing the standard Transformer FFN with a pool of modality-specific experts:

- vision expert (V-FFN) processes image patches,

- language expert (L-FFN) processes text tokens, and

- vision-language expert (VL-FFN) processes fused multimodal inputs.

You can see the architecture on the left in the main image below:

Each Transformer block still has shared multi-head self-attention across modalities, which is crucial for text-image alignment, but the FFN part is conditional: if the input is image-only, use V-FFN, If text-only, use L-FFN, and if it contains both, bottom layers use V-FFN and L-FFN separately, while top layers switch to VL-FFN for fusion. Thus, the same backbone can function both as a dual encoder for retrieval and as a fusion encoder for classification/reasoning.

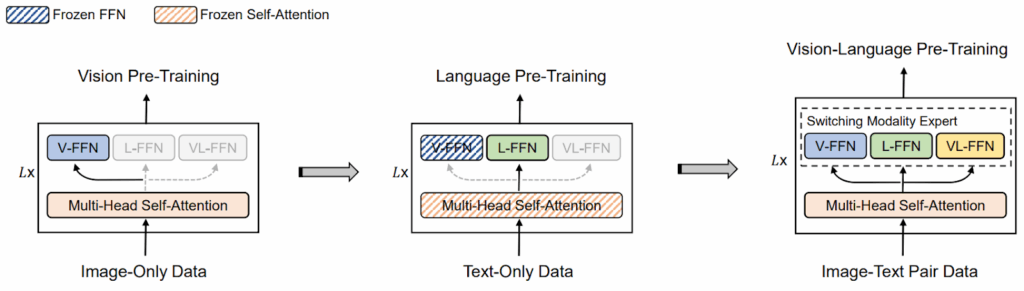

Similarly, VLMo uses stagewise pretraining to use data from both modalities, not just paired datasets:

Note that unlike classical MoE exemplified, e.g., in the Switch Transformer, VLMo is not about sparse routing among dozens of experts. Instead, it is a structured, modality-aware MoE with exactly three experts tied to modality types, and routing is deterministic, depending on the input type. So VLMo was less about scaling and more about efficient multimodal specialization.

As a result, Bao et al. got a model that was strong in both visual question answering and retrieval. But this was only the beginning.

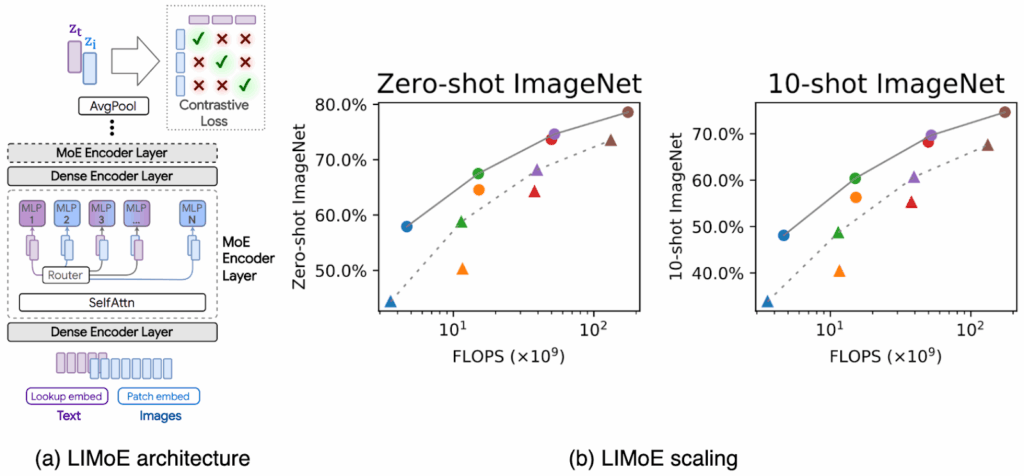

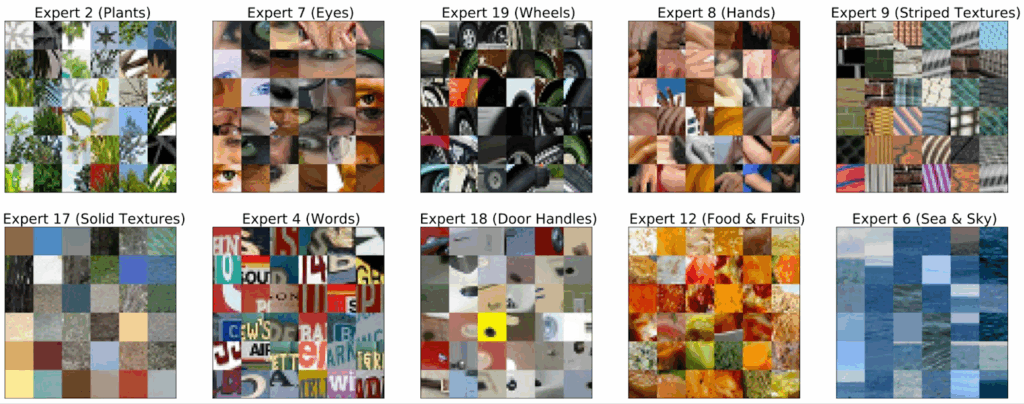

LIMoE. The next step was taken by Google Brain researchers Mustafa et al. (2022) who used multimodal contrastive learning in their Language-Image MoE (LIMoE) architecture. Their central idea was to build a single shared Transformer backbone for both images and text, sparsely activated by a routing network, so that different experts can emerge naturally for different modalities. The main architecture is shown in (a) in the figure below:

Note that unlike VLMo’s deterministic modality experts, here experts are modality-agnostic: the router decides dynamically which expert to use per token. Over training, some experts specialize in vision, others in language, and some in both, and this is an emergent specialization, not a hardcoded one.

The multimodal setting also led to new failure modes, specifically:

- imbalanced modalities: in retrieval-type settings, image sequences are much longer than text (hundreds of patches vs ~16 tokens); without extra care, text tokens all collapse into one expert, which quickly saturates capacity and gets dropped;

- expert collapse: classic MoE auxiliary losses such as importance and load balancing were not enough to ensure balanced expert usage across modalities.

Therefore, LIMoE introduced novel entropy-based regularization with a local entropy loss that encourages each token to commit strongly to one/few experts and a global entropy loss that enforces diversity across tokens so all experts get used. Another important idea was batch priority routing (BPR) which sorts tokens by routing confidence, ensuring that high-probability tokens (often rare text tokens) do not get dropped when experts overflow. These were crucial for stable multimodal MoE training, and Mustafa et al. (2022) showed that their architecture could scale to better results as the backbones got larger, as illustrated in part (b) of the figure above.

Interestingly, in the resulting trained MoE model experts took on semantic specialization as well. The image below illustrates the specializations of some vision experts (Mustafa et al., 2022):

Overall, LIMoE was the first true multimodal sparse MoE. It showed that with some novelties like entropy regularization and BPR, a single shared backbone with routing can match (or beat) two-tower CLIP-like models. It also demonstrated emergent expert specialization: some experts become image-only, some text-only, some multimodal, and there is further semantic specialization down the line.

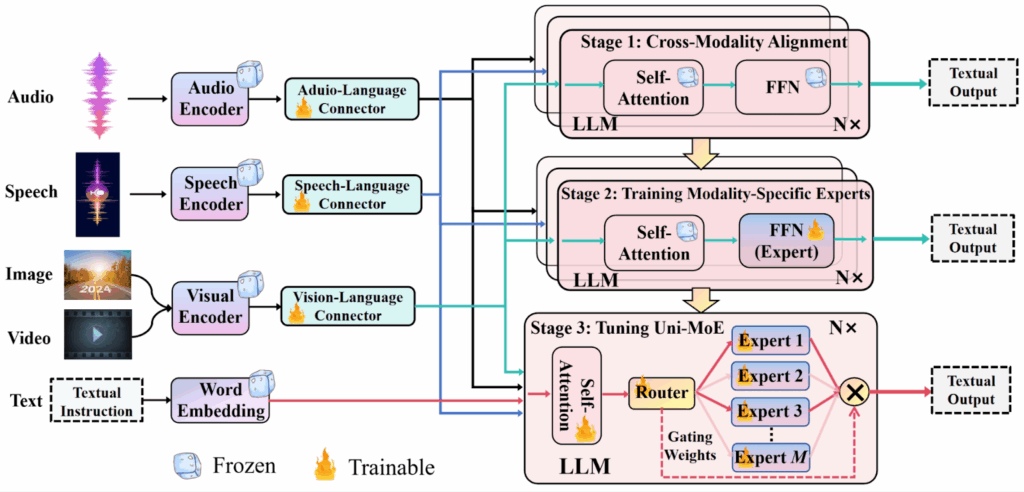

Uni-MoE. By 2024, the ML community had two popular streams of models: dense multimodal LLMs such as LLaVA, InstructBLIP, or Qwen-VL that could handle images and text and sometimes extended to video/audio (with a large computational overhead), and sparse MoEs that we have discussed: GShard, Switch, LIMoE, and MoE-LLaVA. The latter showed efficiency benefits, but were typically restricted to 2-3 modalities.

The ambition of Li et al. (2025) was to build the first unified sparse MoE multimodal LLM that could handle text, image, video, audio, and speech simultaneously, all the time preserving efficient scaling. To achieve that, they introduced:

- modality-specific encoders such as CLIP for images, Whisper for speech, BEATs for audio, and pooled frames for video; their outputs were projected into a unified language space via trainable connectors, so every modality was represented as “soft tokens” compatible with an LLM backbone;

- sparse MoE inside the LLM, where each Transformer block has shared self-attention but replaces the FFN with a pool of experts, with token-level routing;

- lightweight adaptation: instead of fully updating all parameters, Uni-MoE used LoRA adapters on both experts and attention blocks, making fine-tuning feasible on multimodal data.

Moreover, Uni-MoE used a novel three-stage curriculum, progressing from cross-modality alignment training connectors with paired data through training modality-specific experts to unified multimodal fine-tuning. Here is the resulting architecture and training scheme from Li et al. (2025):

As a result, Uni-MoE beat state of the art dense multimodal baselines in speech-image/audio QA, long speech understanding, audio QA and captioning, and video QA tasks. Basically, Uni-MoE presented a general recipe for unified multimodal MoEs, not just image-text, demonstrating that sparse MoE reduces compute while handling many modalities, experts can be both modality-specialized and collaborative, and with progressive training MoE can avoid collapse and bias: Uni-MoE was robust enough even on long, out-of-distribution multimodal tasks.

Having established the foundations of MoE across different modalities and their combinations, we now turn to the cutting edge: works from 2025 that push these ideas in entirely new directions, from semantic control to recursive architectures.

Recent Work on Mixtures of Experts

In the previous sections, we have laid out the foundations of mixture-of-experts models in image, video, and multimodal settings, considering the main works that started off these research directions. In this last section, I want to review several interesting papers that have come out in 2025 and that introduce new interesting ideas or whole new directions for MoE research. Let’s see what are the hottest directions in MoE research now!

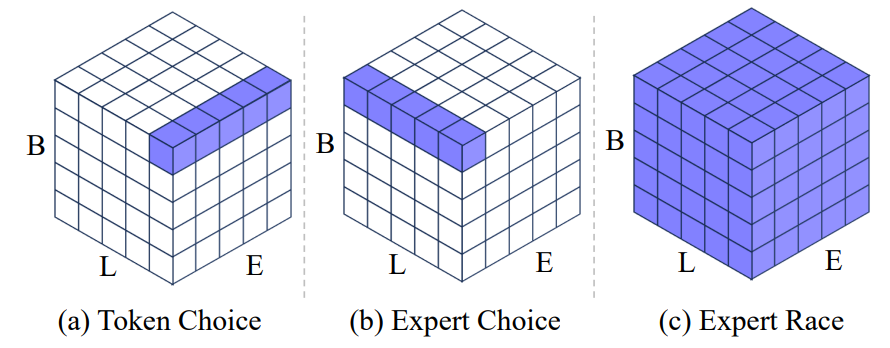

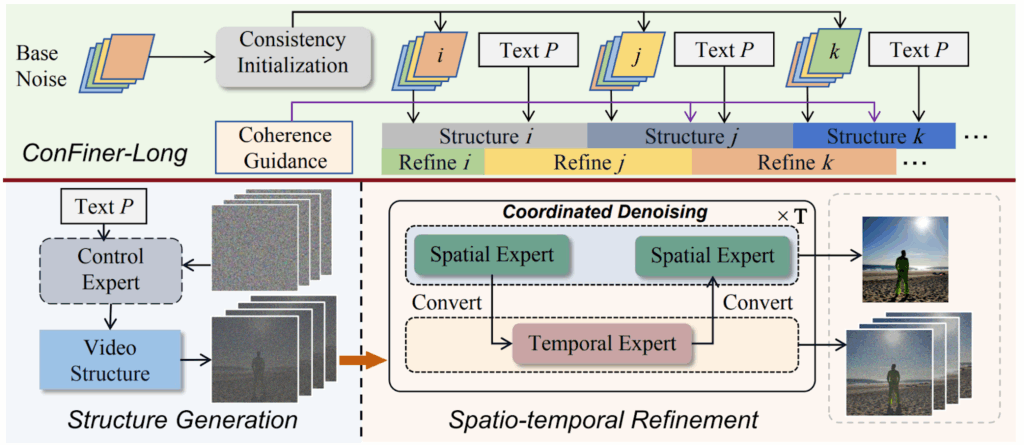

Expert Race. Yuan et al. (2025) concentrate on the problem of rigid routing costs and load imbalance in previous work on MoE models, specifically on MoE-DiT. As we have seen throughout these posts, load balancing is usually done artificially, with an auxiliary loss function. Specifically in DiT, moreover, adaptive layer normalization amplifies deep activations, which may lead to gradients vanishing in early blocks, and MoE-DiT often suffers from mode collapse between experts, when several experts learn redundant behaviours under the classic balance loss.

Traditional MoE variants in diffusion models route each token (token-choice) or each expert (expert-choice) independently, which makes the number of active experts per token fixed. But in practice, some spatial regions or timesteps are harder than others! Yuan et al. propose Race-DiT that collapses all routing scores into a single giant pool and performs a global top-K selection:

Basically, every token competes with every other token across the whole batch, and every expert competes with every other expert—hence the term “expert race”. The general architecture thus becomes as follows:

This maximises routing freedom and allows the network to allocate more compute to “difficult” tokens (e.g., foreground pixels late in the denoising chain) while skipping easy ones.

They also add several interesting technical contributions:

- instead of only equalizing token counts, their router similarity loss minimizes off-diagonal entries of the expert-expert correlation matrix, encouraging specialists rather than redundant experts;

- per-layer regularization (PLR) attaches a lightweight prediction head to every Transformer block so shallow layers receive a direct learning signal.

Thus, Race-DiT shows that routing flexibility, not just parameter count, is the bottleneck when trying to scale Diffusion Transformers efficiently. Note also that their architectural ideas are orthogonal to backbone design and could be reused in other diffusion architectures with minimal changes.

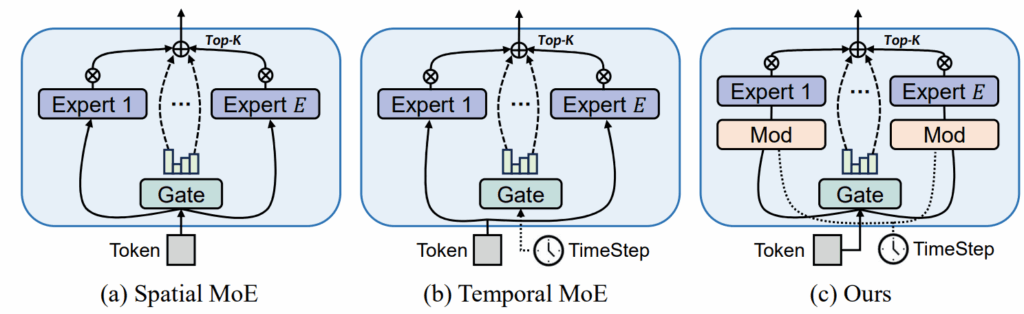

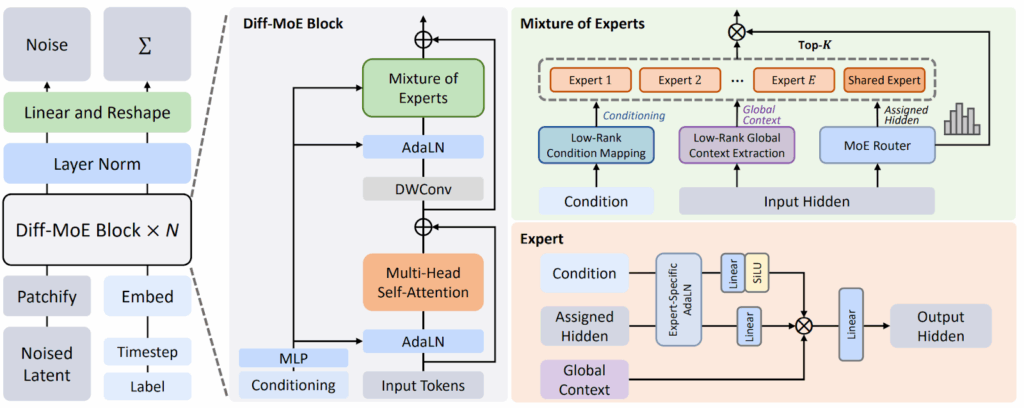

Diff-MoE. Chen et al. (2025) present the Diff-MoE architecture that augments a standard Diffusion Transformer (DiT) with a mixture-of-experts (MoE) MLP block whose routing is jointly conditioned on spatial tokens and diffusion timesteps. Specifically, they target the following problems:

- inefficient dense activation: vanilla DiT fires the full feedforward network for every token at every step;

- single-axis MoE designs: prior work slices either along time (Switch-DiT, DTR) or space (DiT-MoE, EC-DiT), but you can try to use both axes at once;

- lack of global awareness inside experts: token-wise experts can (and do) overfit to local patterns and miss long-range structure.

To avoid this, Chen et al. let every token pick a specialist expert and allow each expert to alter its parameters depending on the current denoising stage. The gate is conditioned on both current timestep and the token itself:

The Diff-MoE architecture, like the original DiT, first transforms the input into latent tokens (“patchify”) and then processes them through a series of Transformer blocks. But Diff-MoE replaces the dense MLP in the original DiT with a carefully designed mixture of MLPs, enabling both temporal adaptability (experts adjust to diffusion timesteps) and spatial flexibility (token-specific expert routing):

This is a very natural step: the authors just remove the “one-size-fits-all” bottleneck that forced existing DiT and MoE-DiT architectures to spend the same amount of compute on easy background pixels at early timesteps and on intricate foreground details near the end of the chain. Diff-MoE shows that the gate is able to learn to leverage joint spatiotemporal sparsity in a smarter way, leading to higher image fidelity and better sample diversity for a given computational budget. Again, the architectural design is a plug-and-play addition for any DiT-style backbone, and it may be especially interesting for videos where the diversity between timesteps is even larger.

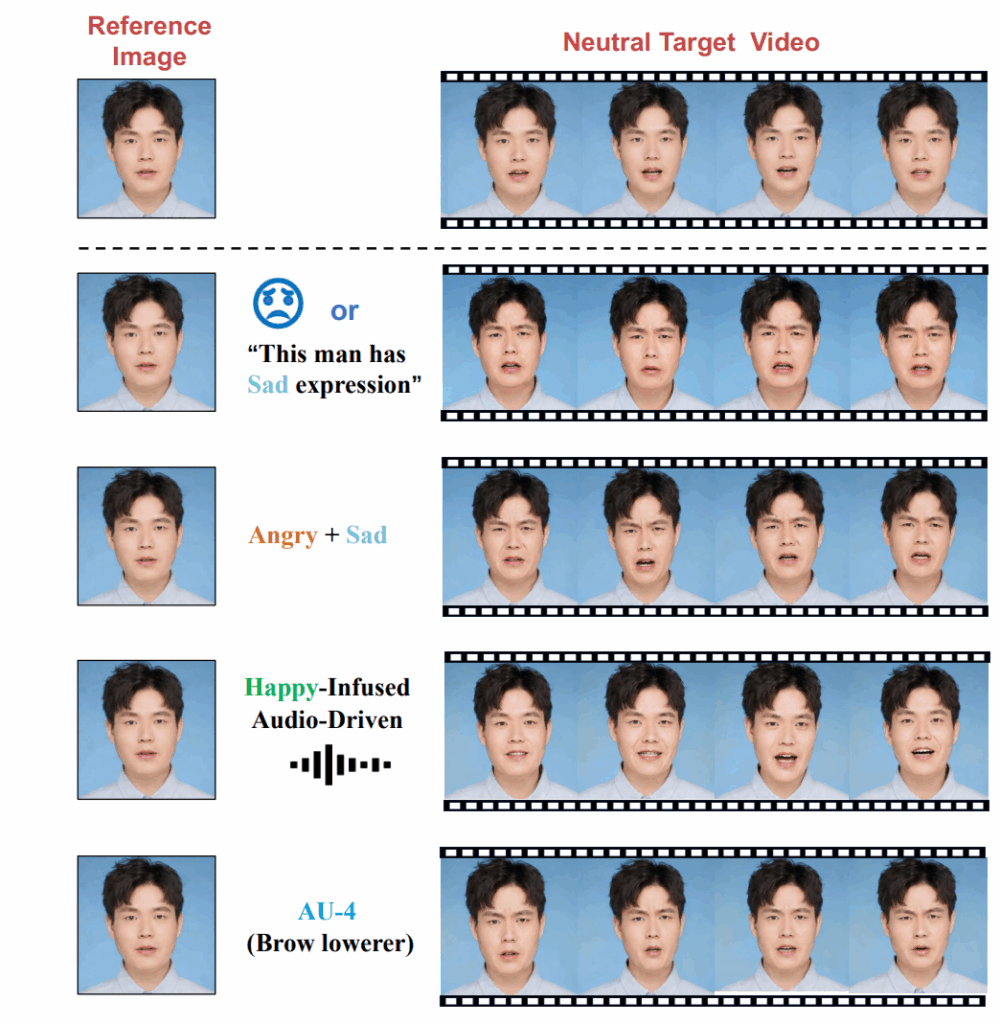

MoEE: Mixture of Emotion Experts. There are plenty of video generation models that can perform lip-sync very well. But most of them are “flat”: they produce either blank or single-style faces, or maybe generate some emotions but do not let the user control them, you get what the model thinks is appropriate for the input text.

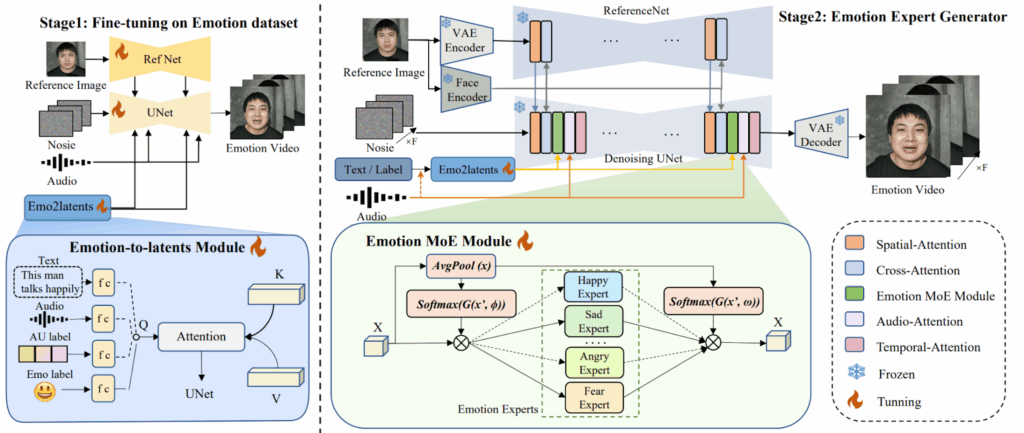

In a very interesting work, Liu et al. (2025) reframe the generation of talking heads as an emotion-conditional diffusion problem. That is, instead of a single monolithic model, MoEE trains and plugs in six emotion-specialist “experts”—one each for happiness, sadness, anger, disgust, fear and surprise—into the cross-attention layers of a diffusion-based U-Net:

A soft routing gate lets every token blend multiple experts, so the network can compose compound emotions (e.g. “happily surprised”) or fine-tune subtle cues. To train the model, the authors first fine-tune ReferenceNet (Hu et al., 2023) and denoising U-Net modules on emotion datasets to learn prior knowledge about expressive faces, and then train their mixture of emotion experts:

As a result, MoEE indeed produces emotionally rich videos significantly better than existing baselines; here is a sample comparison with MoEE at the bottom:

The interesting conclusion from MoEE is that mixture-of-experts is not only a trick for scaling parameters—it is also a powerful control handle! By tying experts to semantically meaningful axes (emotions in this case) and letting the router blend them, the model achieves richer, more natural facial behaviour while still keeping inference in real time. This approach is also quite generic, and you can try to combine it with many different pipelines and backbones.

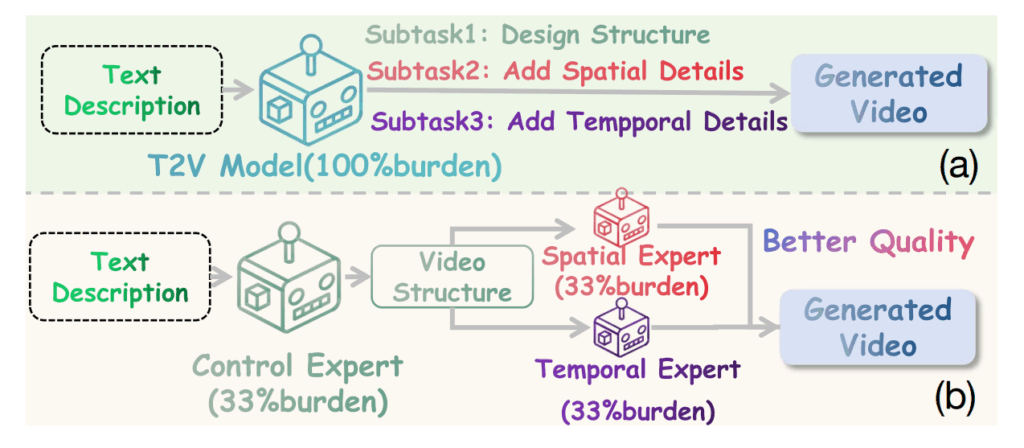

ConFiner. This work by Li et al. (2024) is not as recent as the others in this section, but it is a great illustration for the same idea: that experts can be assigned to semantic subtasks. Specifically, Li et al. posit that asking a single diffusion model to handle every aspect of text-to-video generation is a false bottleneck.

Instead, they suggest to decouple video generation into three explicitly different subtasks and assign each to an off-the-shelf specialist network (an “expert”):

- structure control with a lightweight text-to-video (T2V) model that will sketch a coarse storyboard, specifying things like object layout and global motion;

- temporal refinement, a T2V expert that focuses only on inter-frame coherence;

- spatial refinement, a text-to-image (T2I) expert that sharpens per-frame details and doesn’t much care about the overall video semantics.

Here Li et al. (2024) illustrate their main idea in comparison between “regular” video generation (a) and their decoupled structure (b):

And here is their ConFiner architecture in more detail; first the control expert generates the video structure and then temporal and spatial experts refine all the details:

In general, mixtures of experts are at the forefront of current research. And I want to finish this post with a paper that has just come out when I’m writing this, on July 14, 2025. It is a different kind of mixture, but it plays well with MoE architectures, and it might be just the trick Transformer architectures needed.

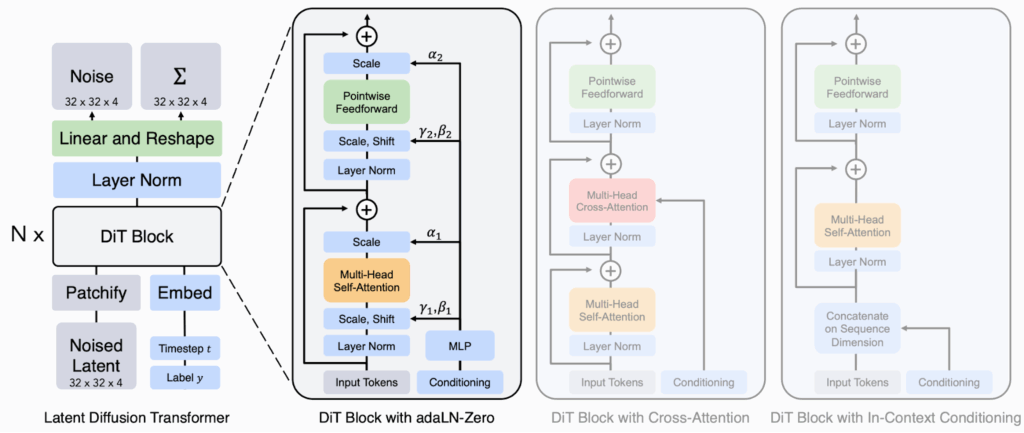

Beyond Traditional MoE: Mixture-of-Recursions

The last paper I want to discuss, by researchers from KAIST and Google Bae et al. (2025), introduces a new idea called Mixture-of-Recursions. This is a different kind of mixture than we have discussed above, so let me step back a little and explain.

Scaling laws have made it brutally clear that larger models do get better, but the ever increasing prices of parameter storage and per‑token FLOPs may soon become impossible to pay even for important problems. How can we reduce these prices? There are at least three different strategies that don’t need major changes in the underlying architecture:

- sparsity on inference via mixtures of experts that we are discussing here;

- layer tying that shrinks the number of parameters by reusing a shared set of weights across multiple layers; this strategy has been explored at least since the Universal Transformers (Dehgani et al., 2018) and keeps appearing in many architectures (Gholami, Omar, 2023; Bae et al., 2025b);

- early exiting where inference stops on earlier layers for simpler tokens; again, this is an old idea going back to at least depth-adaptive Transformers (Elbayad et al., 2020) and evolving to e.g., the recently introduced LayerSkip (Elhoushi et al., 2024).

Prior work normally attacked one axis at a time, especially given that they target different metrics: tying weights saves memory but not inference time, and sparse activations or early exits do the opposite. MoR argues that you can, and should, solve both problems in the very same architectural move.

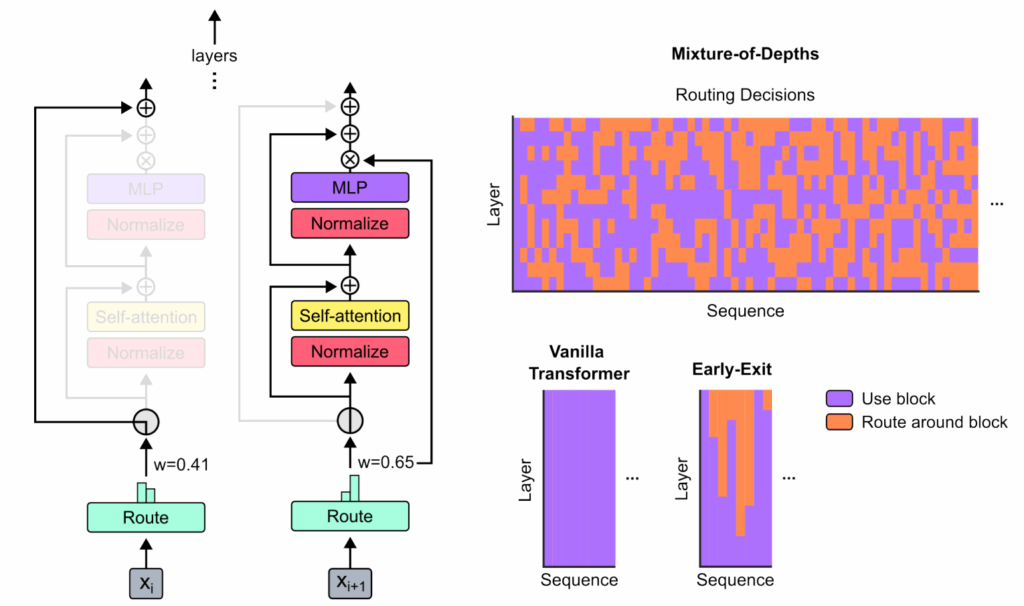

Another direct predecessor of this work was the Mixture-of-Depth idea proposed by Google DeepMind researchers Raposo et al. (2024). They introduced token-level routing for adaptive computation, while extending the recursive transformer paradigm in new directions. Specifically, while MoE models trains a router to choose between different subnetworks (experts), Mixture-of-Depth trains a router to choose whether to use a layer or go directly to a residual connection,

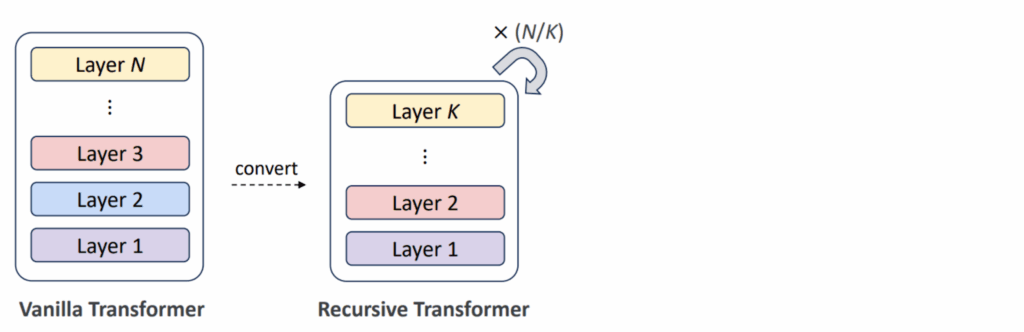

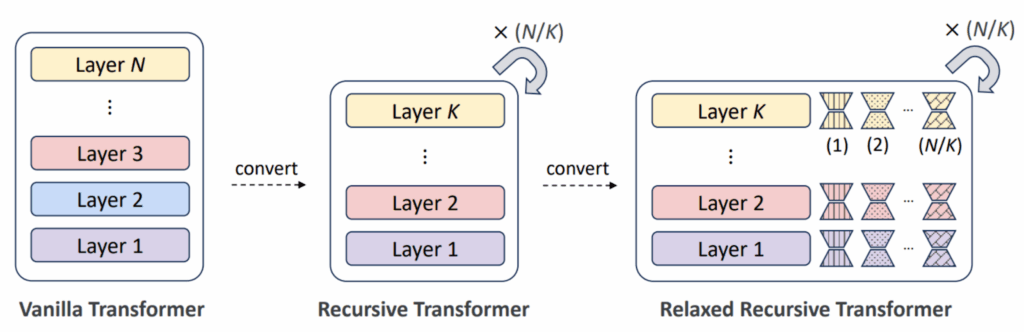

When you combine layer tying and early exits, you get Recursive Transformers (Bae et al., 2025b), models that repeat a single block of K layers multiple times, resulting in a looped architecture. Instead of having 30 unique layers, a recursive model might have just 10 layers that it applies three times, dramatically reducing the parameter count and using an early exit strategy to determine when to stop the recursion:

But what would be the point of reapplying the same layers, you ask? An early layer used to deal with the tokens themselves would be useless when applied to their global semantic representations higher up the chain. Indeed, it would be better to have a way to modify the layers as we go, so the Relaxed Recursive Transformers add small LoRA modifications to the layers that can be trained separately for each iteration of the loop:

Bae et al. (2025b) found that their Recursive Transformers consistently outperformed standard architectures: when you distill a pretrained Gemma 2B model into a recursive Gemma 1B, you get far better results than if you distill it into a regular Gemma 1B, close to the original 2B parameter model.

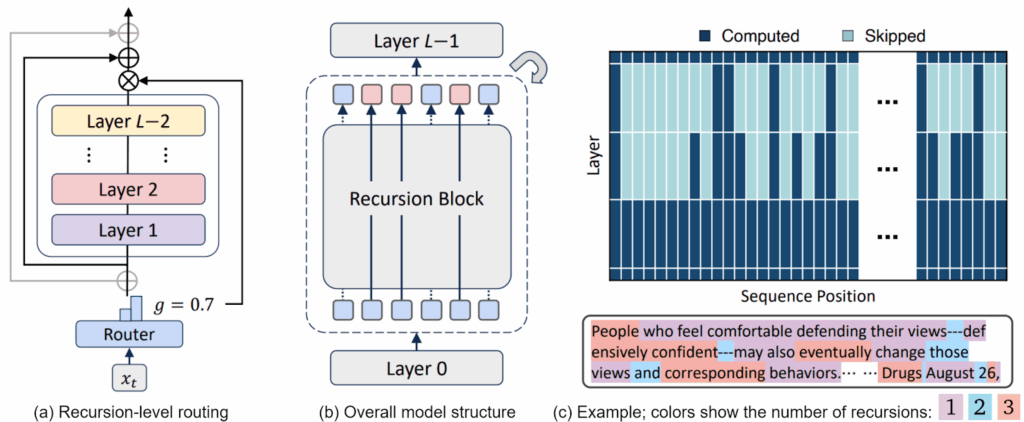

But if the researchers stopped there, this paper would not belong in this post. Bae et al. (2025) introduced intelligent routing mechanisms that decide, for each token individually, how many times to apply the recursive blocks. This is why it’s called “mixture-of-recursions”: lightweight routers make token-level thinking adaptive by dynamically assigning different recursion depths to individual tokens. This means that simple function words might pass through the recursive block just once, while semantically rich content words might go through three or more iterations.

In the illustration below, (a) shows the structure of the router that can skip a recursion step, (b) shows the overall model structure, and (c) illustrates how more straightforward tokens get produced by fewer recursion steps than more semantically rich ones:

The idea is to get each token exactly the amount of processing it needs—no more, no less. The model learns these routing decisions during training, developing an implicit understanding of which tokens deserve deeper processing.

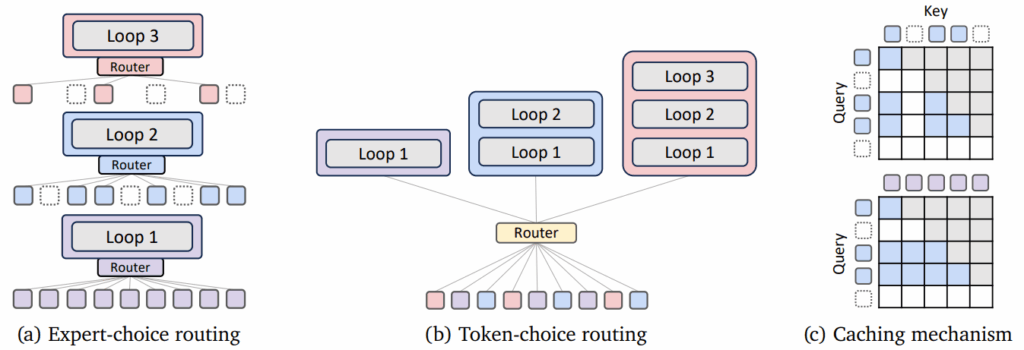

The authors explore two strategies for implementing this adaptive routing:

- expert-choice routing treats each recursion depth as an “expert” that selects which tokens it wants to process; this approach guarantees perfect load balancing since each loop iteration processes a fixed number of tokens, but it requires careful handling of causality constraints to work properly with autoregressive generation;

- token-choice routing takes a simpler path: each token decides upfront how many recursions it needs; while this can lead to load imbalance (what if all tokens want maximum recursions?), the authors show that balancing losses can effectively mitigate this issue.

Here is an illustration of the two routing schemes together with two caching mechanisms proposed by Bae et al. (2025), recursion-wise KV caching that only stores KV pairs for tokens actively being processed at each recursion depth (top) and recursive KV sharing that reuses KV pairs computed in the first recursion for all subsequent steps (bottom):

In practice, both routing approaches yield strong results, with expert-choice routing showing a slight edge in the experiments; recursive KV sharing is faster but more memory-hungry than recursion-wise KV caching.

The empirical results demonstrate that MoR is not just theoretically elegant—it delivers practical benefits. When trained with the same computational budget, MoR models consistently outperform vanilla Transformers in both perplexity and downstream task accuracy, while using roughly one-third the parameters of equivalent standard models. The framework also leads to significant inference speedups (up to 2x) by combining early token exits with continuous depth-wise batching, where new tokens can immediately fill spots left by tokens exiting the pipeline.

Interestingly, MoR also naturally supports test-time scaling: by adjusting recursion depths during inference you can set the tradeoff between quality and speed. In a way, MoR is a natural foundation for sophisticated latent reasoning: by allowing tokens to undergo multiple rounds of processing in hidden space before contributing to the output, MoR makes the model “think before it speaks”, which could lead to more thoughtful and accurate generation.

In general, this set of works—Mixture-of-Depths, recursive Transformers, Mixture-of-Recursions—seems like a very promising direction for making large AI models adaptive not only in what subnetworks they route through, like standard MoE models, but also in how much computation they use.

Conceptually, MoR is a vertical Mixture‑of‑Experts: instead of spreading tokens across wide expert matrices, we send them deeper into time‑shared depth. A combination of MoR and MoE would be straightforward, and perhaps a very interesting next step for this research, and it plays nice with the growing bag of KV‑compression tricks. I would not be surprised to see a “3‑D sparse” model—sequence times depth times experts—emerging from this line of work soon.

Conclusion

In these two posts, we have completed a brief journey through the landscape of mixture of experts architectures. Today, we have seen that for vision and multimodal tasks the simple principle of conditional computation has also led to a remarkable diversity of approaches, from V-MoE’s pioneering application to Vision Transformers, through the sophisticated temporal reasoning of CogVideoX and the mathematical elegance of variational diffusion distillation, to the emergent specialization in multimodal models such as Uni-MoE. We have seen how routing to experts adapts to the unique challenges of each domain.

Several key themes emerge here. First, the concept of sparsity is far richer in visual domains than in text: we have spatial, temporal, and semantic sparsity that can be explored independently or in conjunction. Second, the routing mechanisms themselves have evolved from simple top-k selection to sophisticated schemes that consider global competition (Expert Race), joint spatiotemporal conditioning (Diff-MoE), and even semantic meaning (MoEE, ConFiner). Third, combinations of MoE with other architectural innovations such as diffusion models, variational inference, or recursive Transformers, show that mixtures of experts are not just a scaling trick but a fundamental design principle that combines beautifully with other ideas.

Perhaps most excitingly, recent work like Mixture-of-Recursions hints that different efficiency strategies—in this case sparse activation, weight sharing, and adaptive depth—can be united in single architectures. These hybrid approaches suggest that we are moving beyond isolated optimizations toward holistic designs that are sparse, efficient, and adaptive across multiple dimensions simultaneously.

But perhaps the most interesting implication of these works is what they tell us about intelligence itself. The success of MoE architectures across such diverse domains suggests that specialization and routing—having different “experts” for different aspects of a problem and knowing when to consult each—is not just an engineering optimization but a fundamental principle of efficient information processing. Just as the human brain employs specialized regions for different cognitive tasks, our artificial models are learning that not every computation needs every parameter, and not every input deserves equal treatment. The more information we ask AI models to process, the more important it becomes to filter and weigh the inputs and different processing paths—and this is exactly what MoE architectures can offer.

Sergey Nikolenko

Head of AI, Synthesis AI